Chongyan Chen 陈崇彦

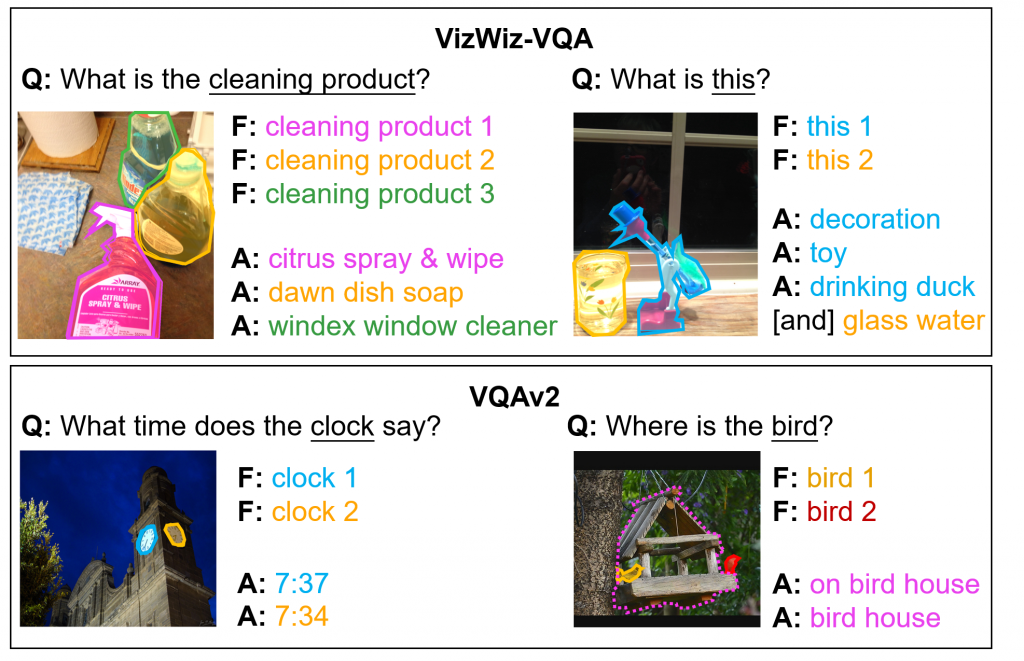

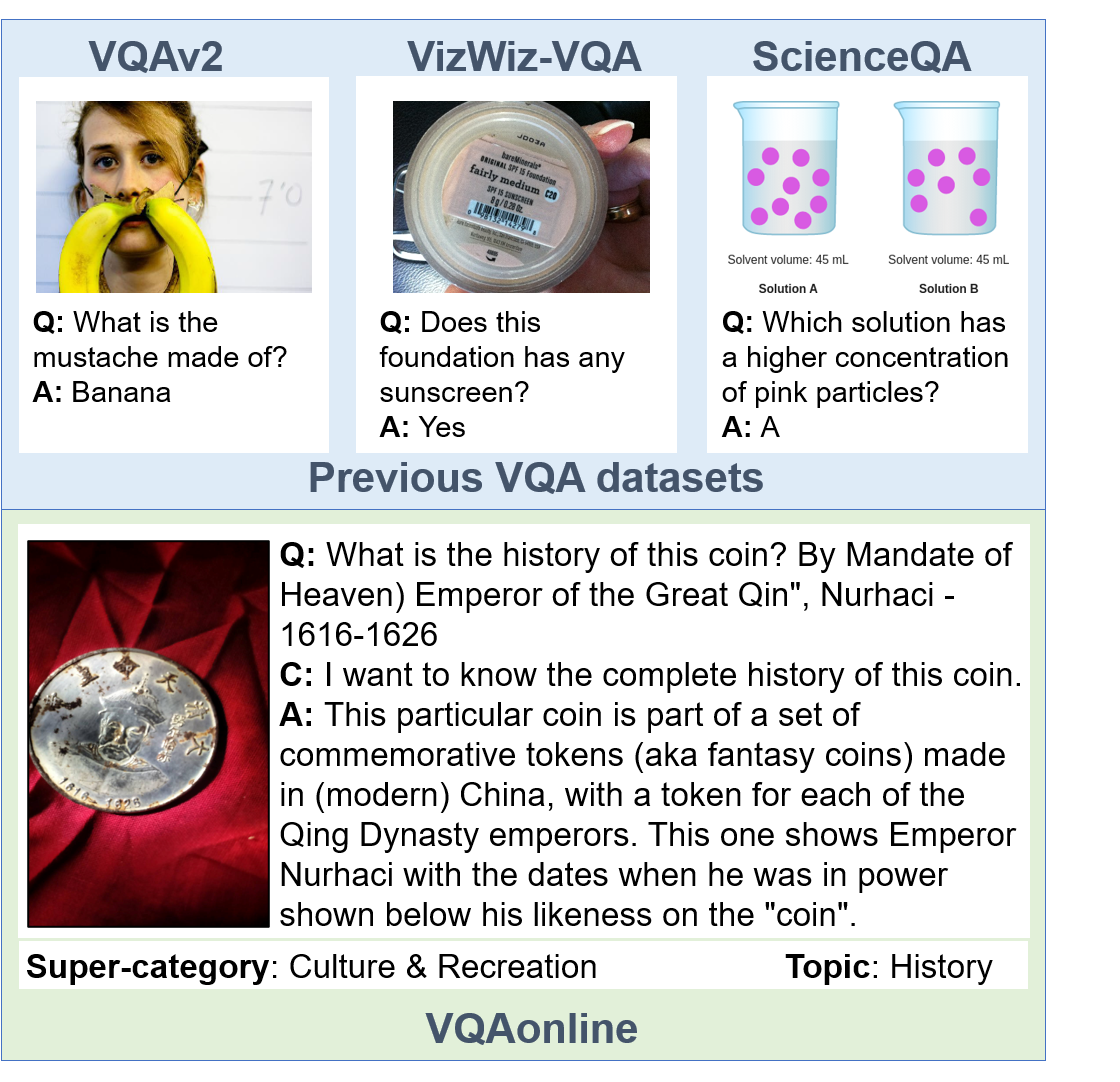

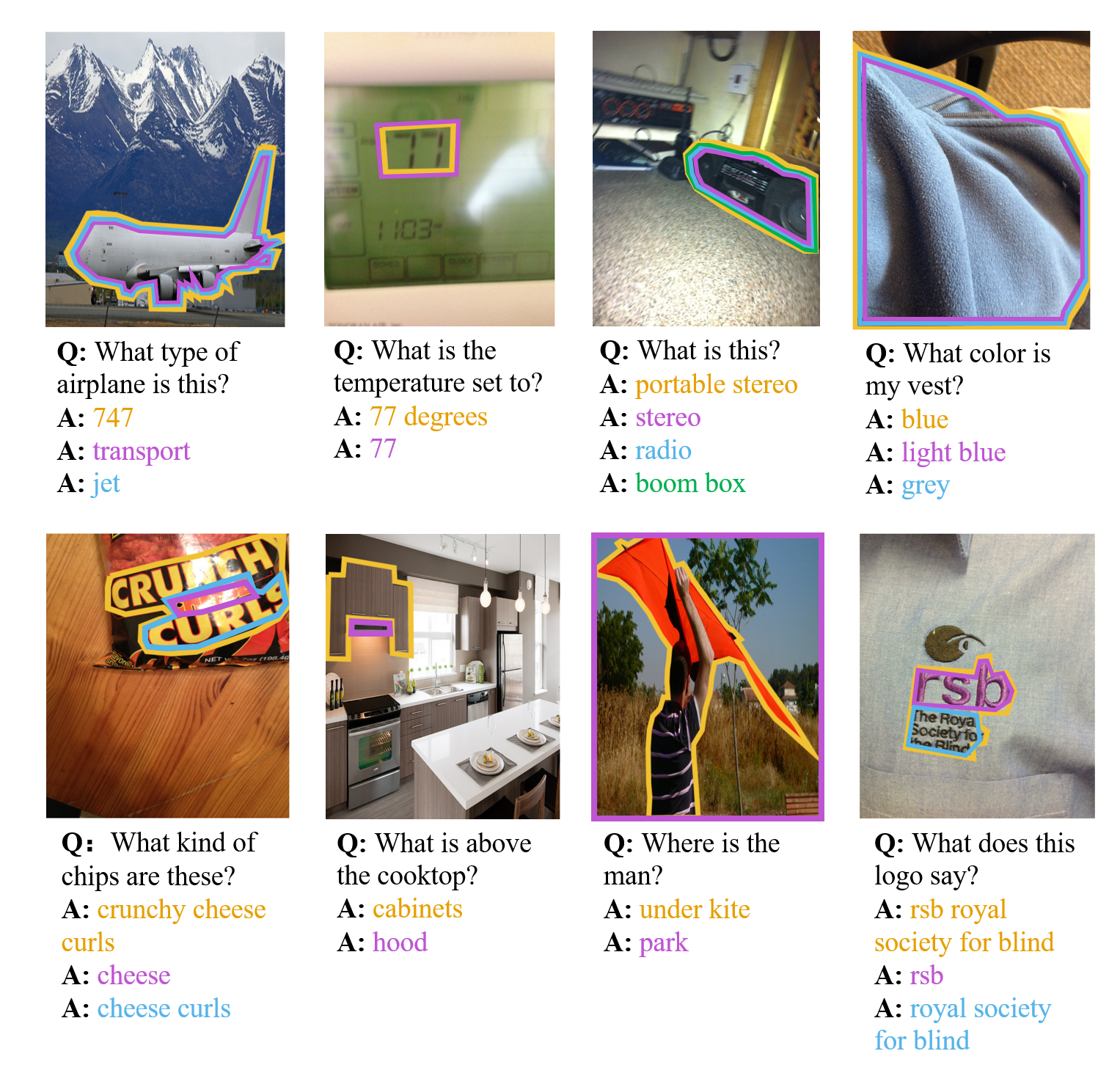

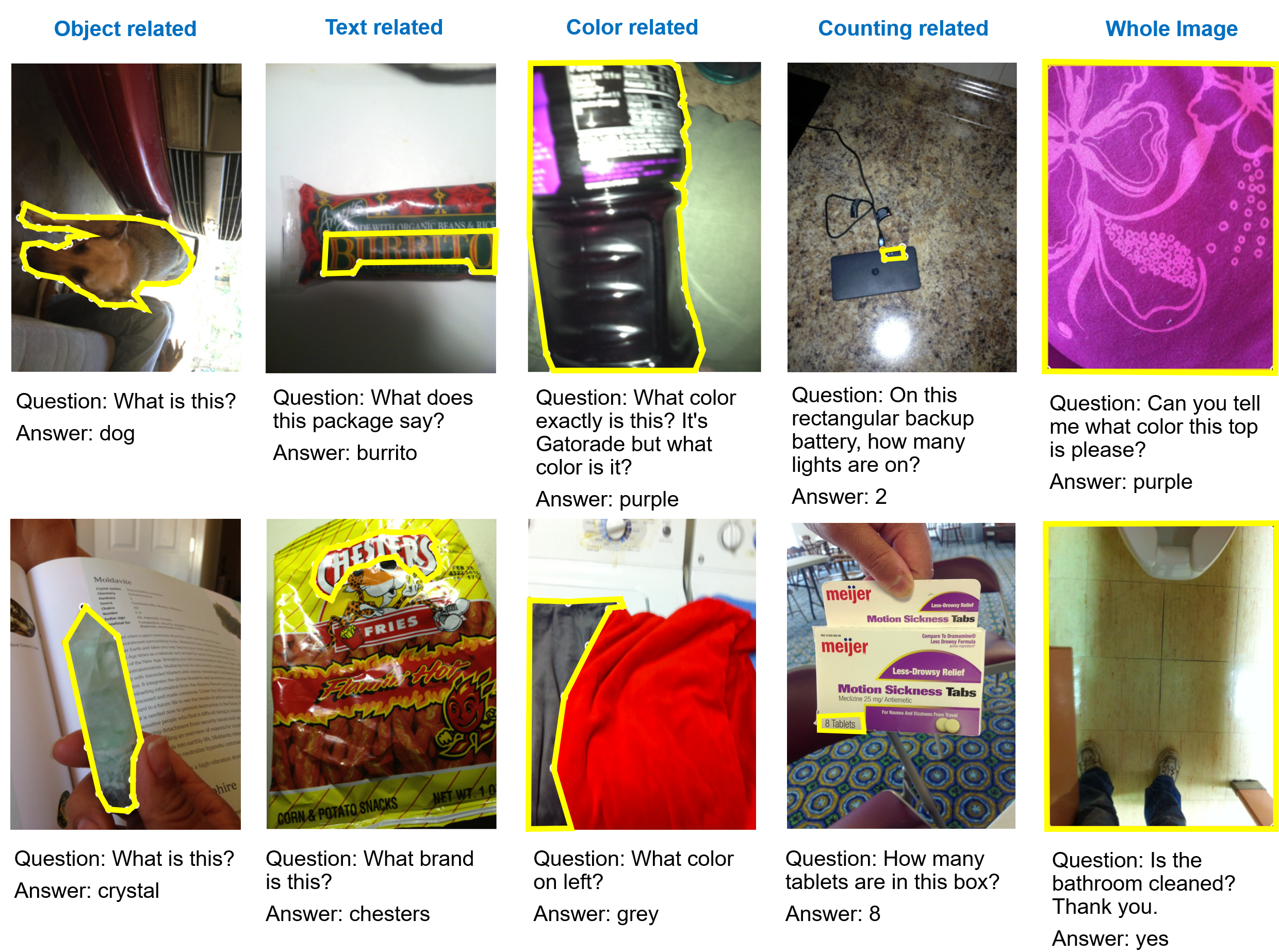

Hi there! I am a research scientist at Google DeepMind. I received my Ph.D. from UT Austin, where I was advised by Prof. Danna Gurari. My research centers on inclusive and accessible visual question answering in authentic use cases. Currently, I am exploring visual questions that call for long-form answers able to accommodate diverse perspectives. More broadly, I take great pleasure in redefining ill-posed problems, delving into data to discover insights, and designing algorithms to address challenges.

In my free time, I enjoy drawing and playing the violin. I also write science articles on social media, and I founded a vision-and-language discussion group that more than 200 researchers have joined.